TL;DR

A mid-sized e-commerce company needed a production-ready, multi-region web platform deployed on Azure, and they needed it fast.

Their infra team was at capacity, and the deadline was two weeks.

Using Agentic InfraOps, I handed the whole job to AI agents, from requirements gathering through Bicep deployment and validation.

The result: fully documented, compliant infrastructure built in 13 hours, with 92% alignment to the Azure Well-Architected Framework and 85% time savings.

Challenge Accepted

When a mid-sized e-commerce company approached us with a familiar infrastructure challenge, I knew I was going to enjoy it.

The ask itself wasn’t exotic:

- Multi-region web platform on Azure

- App Service in West Europe and North Europe

- Azure SQL with geo-replication

- CDN for static assets

- Application Insights for monitoring

The problem was everything around it.

The customer’s infrastructure team was already stretched thin keeping existing systems alive.

They still needed something production-ready, auditable, and aligned with the Azure Well-Architected Framework, and they needed it within two weeks.

This is the kind of work that usually dies in meetings before it ever reaches production.

I Didn’t Use AI as a Copilot. I Used It as an Infra Team

Instead of asking AI to “help me write Bicep,” I used Agentic InfraOps to run the entire delivery lifecycle (Link to the repo).

This matters.

Agentic InfraOps isn’t about autocomplete.

It’s about specialized agents, each owning a phase of the work, validating their output, and handing it off cleanly, just like a disciplined engineering team would.

No shortcuts. No magic prompts. Just structured execution.

Agentic InfraOps Works Because Each Agent Has One Job

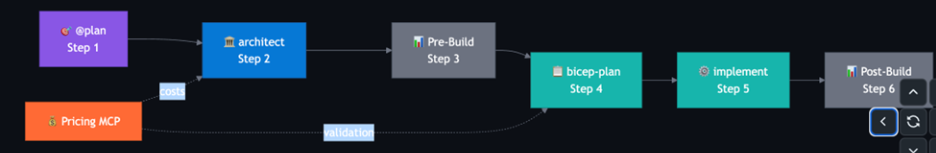

Agentic InfraOps coordinates multiple GitHub Copilot agents across a six-step workflow, with guardrails baked in.

The framework includes:

- Multi-agent orchestration in GitHub Copilot

- Azure Pricing MCP server for real-time cost feedback during design

- Azure Verified Modules for compliant building blocks

- Continuous Well-Architected Framework assessment

The key idea: design decisions happen before code exists, and cost reality is surfaced early, not during post-mortems.
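The “one agent, one job” model can be pictured as a sequence of gated phases: each phase is owned by a single agent, checks its own output, and only then hands off. This is a minimal Python sketch under that assumption, not the Agentic InfraOps implementation. The `Phase`, `Handoff`, and `run_workflow` names are hypothetical, and real agents would produce files (requirements, ADRs, Bicep) rather than dictionaries.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Phase:
    """One step of the workflow, owned by exactly one agent (hypothetical model)."""
    owner: str                                # agent name, e.g. "@plan"
    run: Callable[[dict], dict]               # produces this phase's artifacts from the context
    validate: Callable[[dict], list[str]]     # returns problems; an empty list means "pass"


@dataclass
class Handoff:
    completed: list[str] = field(default_factory=list)  # owners whose output passed validation
    blocked_at: Optional[str] = None                     # first owner whose output failed
    problems: list[str] = field(default_factory=list)   # what that owner's validation reported


def run_workflow(phases: list[Phase], context: dict) -> Handoff:
    """Run phases in order, stopping at the first one whose output fails its own checks."""
    result = Handoff()
    for phase in phases:
        artifacts = phase.run(context)
        problems = phase.validate(artifacts)
        if problems:
            result.blocked_at = phase.owner
            result.problems = problems
            return result
        # Clean handoff: later phases see everything earlier phases produced.
        context = {**context, **artifacts}
        result.completed.append(phase.owner)
    return result
```

Because the loop stops at the first failed gate, nothing downstream ever runs on a rejected artifact. A phase’s `validate` callable could, for example, reject a design whose estimated cost exceeds the budget before any Bicep is written.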

Step 1: I Let the @plan Agent Argue With My Requirements

I started with a simple prompt in VS Code:

> Create a multi-region web application platform with App Service, Azure SQL geo-replication, CDN, Application Insights, support for 10,000 concurrent users, and a €3000/month budget.

The @plan agent didn’t just accept it.

It pushed back.

It asked about:

- Traffic patterns

- Data residency

- Disaster recovery objectives

- Compliance expectations

Then it did something useful: it told me the budget didn’t match the ambition.

Key outcome: I was forced to prioritize early.

That single interaction prevented weeks of downstream redesign.
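One simplified way to picture the pushback in Step 1 is a completeness check over the requirements: anything the prompt leaves unstated becomes a clarifying question before design starts. This Python sketch is illustrative only; the `Requirements` fields and the question wording are assumptions, not the @plan agent’s actual logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Requirements:
    """Hypothetical requirements record; None means "not stated in the prompt"."""
    services: list[str]
    concurrent_users: int
    monthly_budget_eur: float
    traffic_pattern: Optional[str] = None
    data_residency: Optional[str] = None
    dr_objectives: Optional[str] = None   # e.g. RTO/RPO targets
    compliance: Optional[str] = None


# The four topics the agent raised, phrased as questions (wording is illustrative).
QUESTIONS = {
    "traffic_pattern": "What do traffic patterns look like (peaks, seasonality)?",
    "data_residency": "Where must customer data reside?",
    "dr_objectives": "What are the disaster recovery objectives (RTO/RPO)?",
    "compliance": "Which compliance requirements apply?",
}


def open_questions(req: Requirements) -> list[str]:
    """Return one clarifying question for every requirement the prompt left unstated."""
    return [question for attr, question in QUESTIONS.items() if getattr(req, attr) is None]
```

Run against the original prompt, which names services, 10,000 users, and a budget but nothing else, all four questions come back open.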

Step 2: Architecture Happened Before Code, on Purpose

The azure-principal-architect agent evaluated the requirements without writing any infrastructure code.

That separation matters more than people think.

The agent walked through all five Well-Architected pillars and surfaced concrete recommendations:

- Front Door instead of CDN for reliability

- Private Endpoints and Key Vault for security

- Elastic pools for Azure SQL to stay within budget (cost optimization)

- Azure Monitor workbooks and alerting (operational excellence)

- Caching and autoscaling (performance efficiency)

With live pricing data plugged in, it became obvious that our initial SQL choices would burn 70% of the monthly budget.

We fixed that before a single resource was deployed.
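A budget guardrail of this kind can be sketched as a per-resource share of the monthly budget. This is a minimal Python sketch, assuming cost estimates are already available as numbers; the article’s estimates came from the Azure Pricing MCP server, whose interface is not modelled here, and the 50% single-resource threshold is a hypothetical choice.

```python
def budget_report(costs_eur: dict[str, float], budget_eur: float,
                  max_share: float = 0.5) -> dict:
    """Summarize estimated monthly costs against a budget.

    costs_eur maps resource name -> estimated monthly cost in EUR.
    max_share is a hypothetical guardrail: any single resource above this
    fraction of the budget is flagged for redesign.
    """
    total = sum(costs_eur.values())
    shares = {name: cost / budget_eur for name, cost in costs_eur.items()}
    return {
        "total_eur": total,
        "over_budget": total > budget_eur,
        "shares": shares,
        "flagged": [name for name, share in shares.items() if share > max_share],
    }
```

With the €3000/month budget from the prompt, an SQL choice consuming 70% of it corresponds to €2100/month, so `budget_report({"Azure SQL": 2100.0}, 3000.0)["flagged"]` returns `["Azure SQL"]` even though the total alone is still within budget.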

Step 3: Documentation Was Generated Before Anyone Asked for It

Before implementation, the workflow produced:

- Architecture diagram (Mermaid)

- Architecture Decision Records

- Cost breakdown by resource

- Security baseline assessment

This wasn’t paperwork for its own sake.

The diagram made the design legible to leadership.

The ADRs created an audit trail explaining why decisions were made.

This is usually the stuff teams promise to write “later.”

Here, it existed by default.
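Part of what makes a Mermaid diagram cheap to keep current is that it is plain text and can be generated from a list of connections. The Python sketch below assumes a simplified topology assembled from services named in this post (Front Door, App Service in two regions, geo-replicated Azure SQL, Key Vault, Application Insights); it is not the diagram the workflow actually produced.

```python
# Hypothetical topology built from services mentioned in the post.
EDGES = [
    ("Front Door", "App Service (West Europe)"),
    ("Front Door", "App Service (North Europe)"),
    ("App Service (West Europe)", "Azure SQL (primary)"),
    ("App Service (North Europe)", "Azure SQL (primary)"),
    ("Azure SQL (primary)", "Azure SQL (geo-secondary)"),
    ("App Service (West Europe)", "Key Vault"),
    ("App Service (North Europe)", "Key Vault"),
    ("App Service (West Europe)", "Application Insights"),
    ("App Service (North Europe)", "Application Insights"),
]


def to_mermaid(edges: list[tuple[str, str]]) -> str:
    """Render (source, target) pairs as a Mermaid left-to-right flowchart."""
    ids: dict[str, str] = {}

    def node(label: str) -> str:
        # Mermaid node IDs should be simple tokens, so assign n0, n1, ...
        # and attach the readable label only the first time a node appears.
        if label not in ids:
            ids[label] = f"n{len(ids)}"
            return f'{ids[label]}["{label}"]'
        return ids[label]

    lines = ["flowchart LR"]
    for src, dst in edges:
        lines.append(f"    {node(src)} --> {node(dst)}")
    return "\n".join(lines)
```

Printing `to_mermaid(EDGES)` yields text that can be pasted into any Mermaid renderer; regenerating it after a design change keeps the diagram in step with the architecture.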

Step 4: The Plan Caught a Front Door Failure Before It Happened

The bicep-plan agent built an implementation roadmap without writing Bicep yet.

It defined:

- Naming conventions

- Deployment order

- RBAC and managed identities

- Governance policies

- Validation strategy

Critical catch: it flagged that Azure Front Door origin group configuration was more complex than the initial design assumed.

That correction happened in planning, not during a failed deployment window.
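The deployment-order part of the plan is essentially a topological sort: every resource must come after the resources it depends on, and a circular dependency should fail the plan. This Python sketch uses the standard-library `graphlib` module (Python 3.9+); the dependency map is a hypothetical simplification of this platform, not the one the bicep-plan agent produced. In Bicep itself, deployment order is largely inferred from symbolic references between resources, so the sketch only illustrates the reasoning behind the plan.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each resource lists what must exist before it.
DEPENDENCIES = {
    "log-analytics": set(),
    "app-insights": {"log-analytics"},
    "key-vault": set(),
    "sql-primary": set(),
    "sql-geo-secondary": {"sql-primary"},
    "app-service-plan-weu": set(),
    "app-service-plan-neu": set(),
    "web-app-weu": {"app-service-plan-weu", "key-vault", "app-insights", "sql-primary"},
    "web-app-neu": {"app-service-plan-neu", "key-vault", "app-insights", "sql-primary"},
    # Front Door origins point at the web apps, so it is deployed after both.
    "front-door": {"web-app-weu", "web-app-neu"},
}


def deployment_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every resource follows all of its dependencies.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(deps).static_order())
```

Independent resources (for example, the two App Service plans) may appear in either order; only the dependency constraints are guaranteed.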

Step 5: Bicep Was Generated, Not Guessed

Only after the plan was validated did the bicep-implement agent generate code.

The output included:

- Modular Bicep using Azure Verified Modules where possible

- Parameter files for dev, staging, and prod

- Pre-flight validation scripts

- Azure Policy integration
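Per-environment parameter files keep one set of Bicep modules and vary only the inputs. The Python sketch below renders values into the standard JSON deployment parameters format (`$schema`, `contentVersion`, `parameters`) that Bicep deployments accept; Bicep also has a native `.bicepparam` format. The parameter names and App Service SKUs are hypothetical and would need to match the `param` declarations in your own Bicep entry point.

```python
import json

# Schema URL used by ARM/Bicep JSON deployment parameter files.
SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#"

# Hypothetical per-environment values; names must match your Bicep `param`s.
ENVIRONMENTS = {
    "dev":     {"environmentName": "dev",     "appServiceSkuName": "B1",   "enableGeoReplication": False},
    "staging": {"environmentName": "staging", "appServiceSkuName": "S1",   "enableGeoReplication": True},
    "prod":    {"environmentName": "prod",    "appServiceSkuName": "P1v3", "enableGeoReplication": True},
}


def parameter_file(values: dict) -> str:
    """Render one environment's values in the deploymentParameters JSON format."""
    doc = {
        "$schema": SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in values.items()},
    }
    return json.dumps(doc, indent=2)
```

Writing each rendered string to a file such as `main.prod.parameters.json` (a hypothetical naming scheme) gives one file per environment, so differences between dev, staging, and prod are visible in review rather than buried in the templates.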

About 800 lines of Bicep, split cleanly across modules.

No naming drift.

No missing tags.

No overly broad permissions.
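“No naming drift” and “no missing tags” are the kind of guarantees a simple lint over the planned resources can check mechanically. This Python sketch assumes a hypothetical `<type>-<workload>-<environment>-<region>` convention, with type abbreviations loosely modelled on Cloud Adoption Framework examples, and a hypothetical set of mandatory tags; substitute your own standards.

```python
import re

# Hypothetical convention, e.g. "app-shop-prod-weu" or "afd-shop-prod-global".
NAME_PATTERN = re.compile(
    r"(app|asp|sql|kv|afd|appi|log)-[a-z0-9]+-(dev|staging|prod)-(weu|neu|global)"
)

# Hypothetical mandatory tags.
REQUIRED_TAGS = {"environment", "owner", "costCenter"}


def lint(resources: list[dict]) -> list[str]:
    """Return one message per naming or tagging violation."""
    problems = []
    for res in resources:
        name = res["name"]
        # fullmatch requires the whole name to fit the pattern, not just a prefix.
        if not NAME_PATTERN.fullmatch(name):
            problems.append(f"{name}: name does not follow the convention")
        missing = REQUIRED_TAGS - set(res.get("tags", {}))
        if missing:
            problems.append(f"{name}: missing tags {sorted(missing)}")
    return problems
```

An empty result is the “zero violations, full tagging coverage” state; running the same check in CI keeps it that way as the code evolves.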

It also generated post-deployment validation tests to confirm the environment matched expectations.

Step 6: The “Afterthought” Artifacts Weren’t an Afterthought

Once the infrastructure was reviewed, the final artifacts dropped out automatically:

- As-built architecture diagram

- Deployed resource inventory

- Operational runbooks

- Security review checklist

These usually get written only after someone breaks production.

Here, they shipped with the platform.
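A deployed resource inventory also makes post-deployment validation straightforward: compare what the plan expected with what actually exists. This Python sketch assumes both sides are available as name-to-type maps; collecting the real inventory (for example, from Azure Resource Graph or `az resource list`) is outside its scope, and the function name is hypothetical.

```python
def compare_inventory(expected: dict[str, str], deployed: dict[str, str]) -> dict[str, list[str]]:
    """Compare planned vs. deployed resources; both map resource name -> resource type."""
    common = set(expected) & set(deployed)
    return {
        # Planned but not found in the environment.
        "missing": sorted(set(expected) - set(deployed)),
        # Found in the environment but not in the plan (possible drift).
        "unexpected": sorted(set(deployed) - set(expected)),
        # Same name, different resource type.
        "type_mismatch": sorted(name for name in common if expected[name] != deployed[name]),
    }
```

An environment matches expectations when all three lists are empty; anything else is a concrete item for the runbook or the review checklist.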

The Whole Platform Took 13 Hours, Not Two Weeks

Here’s where the time actually went:

- Agent interaction: 3 hours

- Review and validation: 4 hours

- Testing and refinement: 6 hours

Total: 13 hours

Time reduction: 85%

That’s not because the agents were “fast typists.”

It’s because rework was eliminated early.
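The post does not state the baseline behind the 85% figure. Working backwards from the numbers given, the implied manual-delivery estimate is:

$$
\begin{aligned}
t_{\text{total}} &= 3 + 4 + 6 = 13\ \text{h} \\
1 - \frac{t_{\text{total}}}{t_{\text{baseline}}} = 0.85
\;&\Rightarrow\;
t_{\text{baseline}} = \frac{13}{1 - 0.85} \approx 86.7\ \text{h}
\end{aligned}
$$

For comparison, assuming the two-week deadline equals two 40-hour working weeks (80 h), the reduction would be $1 - 13/80 \approx 84\%$, close to the stated figure.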

The Quality Was Better Than a Typical First Draft

Measured outcomes:

- Zero naming convention violations

- Full tagging coverage from day one

- Azure Verified Modules used for 63% of resources

- 92% Well-Architected Framework alignment
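A metric like “Azure Verified Modules used for 63% of resources” can be computed from the module references in the Bicep code. AVM Bicep modules are consumed from the public Bicep registry with references beginning `br/public:avm/`, while custom modules are usually local file paths. This Python sketch assumes one module reference per resource, which simplifies reality (one module can deploy several resources); the function name and sample versions are hypothetical.

```python
# Prefix used when referencing Azure Verified Modules from the public Bicep registry,
# e.g. "br/public:avm/res/web/site:<version>".
AVM_PREFIX = "br/public:avm/"


def avm_coverage(module_refs: dict[str, str]) -> float:
    """Share of resources (name -> module reference) deployed through an AVM module."""
    if not module_refs:
        return 0.0
    avm = sum(1 for ref in module_refs.values() if ref.startswith(AVM_PREFIX))
    return avm / len(module_refs)
```

For example, two AVM references (such as `avm/res/web/site` and `avm/res/key-vault/vault`) plus two local modules give a coverage of 0.5; the remaining share shows where custom modules still need extra review.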

This wasn’t a prototype.

It was production-ready infrastructure with audit-friendly documentation.

Final Thought: The Win Wasn’t Speed. It Was Discipline.

Agentic InfraOps didn’t replace engineering judgment.

It enforced it: consistently, early, and without fatigue.

If you’re thinking about AI in infrastructure as “faster code,” you’re missing the point.

The real leverage is forcing good architecture habits before humans have time to cut corners.

That’s what made this work.

—

Mark Valman

Cloud Solution Architect @ 2bcloud

Want to learn more about my GenAI experiments?

Contact me [email protected]