TL;DR

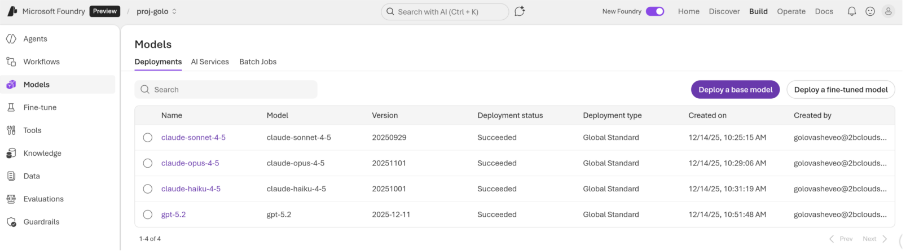

Microsoft just added Anthropic’s Claude models to Microsoft Foundry.

Instead of reading the press release, I ran a mini-benchmark to see how Claude Opus, Sonnet, and Haiku actually perform with real Python tasks and stdin/stdout workflows.

The results will surprise you.

Opus was the most complete, Haiku the fastest (and chattiest), and instruction-following was the weak point across the board.

If you’re thinking about using Claude in production, read this first.

Skip the Hype: What Engineers Actually Need to Know

The official word came in Microsoft’s announcement, “Introducing Anthropic’s Claude models in Microsoft Foundry”.

This means that Claude models are now available inside an Azure-native surface.

Sounds promising, but I’m not interested in marketing press releases.

I care about whether this actually works in pipelines today.

As an Azure engineer, I translate announcements like this into simpler questions:

- Where’s the endpoint?

- Which SDK do I use?

- Will the model behave when my pipeline feeds it stdin instead of poetry prompts?

So instead of opening a slide deck, I opened a terminal.


Why I Built a Mini Benchmark Instead of Reading the Docs

When new models land in a production environment, the first question isn’t “Which model is smartest?” It’s:

- How fast is it?

- Does it follow instructions?

- Will it break our workflows?

I didn’t want another big benchmarking suite. I needed a quick, cheap, repeatable way to smoke test real behavior. That’s where Mini-5 comes in.

The Mini-5: Five Python Tasks That Expose Real Integration Flaws

These aren’t clever coding challenges. They’re boring on purpose.

Here’s what I threw at the models:

- p95(values): Percentile logic with edge-case ambiguity

- unique(seq): Deduplicate while preserving order

- Read JSON from stdin ({service, latency}), return average latency per service

- top3(data): Return three keys with the highest values

- Read CSV from stdin, return structured JSON

These tasks test how a model handles ambiguity, instruction compliance, data plumbing, and output discipline, all of which are critical in real workflows.
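For reference, here is a minimal sketch of what passing answers to the Mini-5 could look like. It is not the prompt or the grading key behind the runs in this post. The function names mirror the task list, while the stdin record shape (a JSON array of objects with `service` and `latency` fields) and the CSV output shape (one JSON object per row) are my assumptions. Because the p95 task is deliberately ambiguous, two common definitions are shown side by side: nearest-rank and linear interpolation (NumPy's default method).

```python
import csv
import io
import json
import math
from collections import defaultdict
from typing import Hashable, Iterable, Mapping, Sequence


def p95_nearest_rank(values: Sequence[float]) -> float:
    """95th percentile as the ceil(0.95 * n)-th smallest value (nearest-rank)."""
    if not values:
        raise ValueError("p95 of an empty sequence is undefined")
    ordered = sorted(values)
    rank = -(-95 * len(ordered) // 100)  # integer ceil(0.95 * n), no float rounding
    return ordered[rank - 1]


def p95_linear(values: Sequence[float]) -> float:
    """95th percentile by linear interpolation between neighbouring ranks."""
    if not values:
        raise ValueError("p95 of an empty sequence is undefined")
    ordered = sorted(values)
    h = (len(ordered) - 1) * 0.95       # fractional 0-based position
    lo = math.floor(h)
    hi = min(lo + 1, len(ordered) - 1)  # clamp for single-element input
    return ordered[lo] + (h - lo) * (ordered[hi] - ordered[lo])


def unique(seq: Iterable[Hashable]) -> list:
    """Drop duplicates, keeping first-seen order (dicts preserve insertion order)."""
    return list(dict.fromkeys(seq))


def top3(data: Mapping[str, float]) -> list:
    """Keys with the three highest values; sorted() is stable, so ties keep input order."""
    return sorted(data, key=data.__getitem__, reverse=True)[:3]


def avg_latency_per_service(raw: str) -> dict:
    """Assumed input: a JSON array like [{"service": "api", "latency": 120}, ...]."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for record in json.loads(raw):
        totals[record["service"]] += record["latency"]
        counts[record["service"]] += 1
    return {service: totals[service] / counts[service] for service in totals}


def csv_to_json(raw: str) -> str:
    """Assumed input: CSV with a header row; each row becomes one JSON object of strings."""
    return json.dumps(list(csv.DictReader(io.StringIO(raw))))
```

On the values 1 through 10 the two p95 definitions already disagree: nearest-rank returns 10, linear interpolation returns about 9.55. In a pipeline, the stdin tasks would be called as `avg_latency_per_service(sys.stdin.read())` inside an `if __name__ == "__main__":` block that prints only the final JSON.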

The Test Setup: Same Settings, No Shortcuts

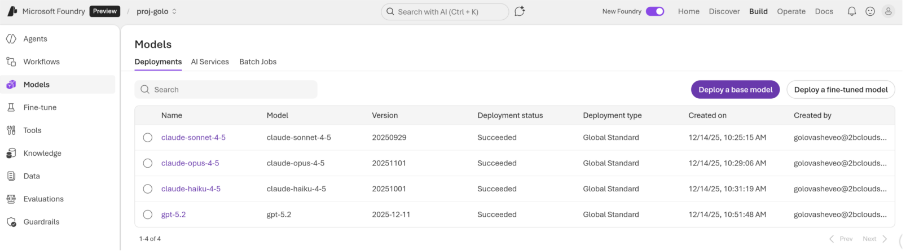

I ran all three Claude variants in Microsoft Foundry:

- Claude Haiku 4.5

- Claude Sonnet 4.5

- Claude Opus 4.5

With these consistent settings:

- Temperature: 0

- Streaming: enabled

- Wall-clock execution time measured

- Input/output tokens recorded

- Model token limits respected

Everything was saved as structured JSON and summarized in Markdown.
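The setup above maps onto a small harness. This is a sketch, not the script that produced these numbers: `call_model` is a hypothetical callable you would implement with whichever SDK your Foundry deployment exposes. It is assumed to send the prompt with temperature 0 and streaming enabled, drain the stream, and return the text plus the token counts the endpoint reports. Only the timing, record, and report logic is shown.

```python
import json
import time
from typing import Callable, Dict, List, Tuple

# Hypothetical contract: prompt in; (response text, input tokens, output tokens) out.
# A real implementation would stream with temperature=0 and read usage from the API.
ModelCall = Callable[[str], Tuple[str, int, int]]


def run_once(model: str, call_model: ModelCall, prompt: str) -> Dict:
    """Time one full request (elapsed wall time, including streaming) and keep the output."""
    start = time.perf_counter()
    text, input_tokens, output_tokens = call_model(prompt)
    elapsed = time.perf_counter() - start
    return {
        "model": model,
        "time_s": round(elapsed, 3),
        "input_tokens": input_tokens,
        "output_tokens": output_tokens,
        "total_tokens": input_tokens + output_tokens,
        "output": text,  # kept verbatim so the judge sees exactly what the model sent
    }


def to_markdown(records: List[Dict]) -> str:
    """Summarize records in the same table layout used in the results section."""
    lines = [
        "| Model | Time (s) | Input Tokens | Output Tokens | Total Tokens |",
        "|---|---|---|---|---|",
    ]
    for r in records:
        lines.append(
            f"| {r['model']} | {r['time_s']} | {r['input_tokens']} "
            f"| {r['output_tokens']} | {r['total_tokens']} |"
        )
    return "\n".join(lines)


def save_json(records: List[Dict], path: str) -> None:
    """Persist the structured results so they can be judged later."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(records, fh, indent=2)
```

Keeping the raw output in each record matters more than it looks: latency and token counts say nothing about whether the response is usable, so the judging step needs the exact text.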

GPT-5.2 as the Judge: Because Output Discipline Matters

You can’t trust latency alone. A fast model that adds Markdown, commentary, or logging noise will break a production pipeline.

To score consistently, I used GPT-5.2 as an automated judge:

- Analytical summary per task

- Scores for correctness, instruction-following, code quality, and robustness

- Normalized data for reporting

This kept the evaluation consistent and machine-readable.
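Making a judge machine-readable mostly comes down to forcing a fixed JSON shape and rejecting anything else. The sketch below assumes a hypothetical verdict format with one integer per scoring dimension plus a summary, on an assumed 1 to 5 scale; the post does not state the judge prompt or the scale, so adjust both to your own rubric.

```python
import json

# Hypothetical verdict schema: the four dimensions scored in the evaluator table.
SCORE_KEYS = ("correctness", "instructions", "code_quality", "robustness")


def parse_verdict(raw: str, low: int = 1, high: int = 5) -> dict:
    """Parse a judge reply and fail loudly if it drifts from the expected shape."""
    verdict = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on prose or fences
    if not isinstance(verdict, dict):
        raise ValueError("verdict must be a JSON object")
    for key in SCORE_KEYS:
        score = verdict.get(key)
        # bool is a subclass of int, so exclude it explicitly
        if isinstance(score, bool) or not isinstance(score, int) or not low <= score <= high:
            raise ValueError(f"{key}: expected an integer in [{low}, {high}], got {score!r}")
    if not isinstance(verdict.get("summary"), str):
        raise ValueError("summary: expected a string")
    # Drop extra keys so every report row has identical columns
    return {key: verdict[key] for key in (*SCORE_KEYS, "summary")}
```

A judge reply wrapped in a Markdown fence fails at `json.loads`, which is the same output-discipline problem the judge is there to catch in the models under test.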

Results: What Each Claude Model Did Right (and Wrong)

Benchmark Results

| Model | Time (s) | Input Tokens | Output Tokens | Total Tokens |
|---|---|---|---|---|
| claude-sonnet-4-5 | 7.754 | 183 | 429 | 612 |
| claude-opus-4-5 | 8.226 | 183 | 528 | 711 |
| claude-haiku-4-5 | 5.404 | 183 | 903 | 1086 |

What stands out:

- Haiku is the fastest, but produces the most output tokens.

- Sonnet sits in the middle, both in latency and verbosity.

- Opus is the slowest, but generates the most complete solutions.
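One way to read the first table is output tokens per wall-clock second. This is a rough derived figure, not something the benchmark reported: the timer also covers request overhead and time to first token, so treat it as a ratio rather than a throughput spec. The inputs below are copied from the table.

```python
# (time_s, input_tokens, output_tokens) copied from the benchmark table above
results = {
    "claude-sonnet-4-5": (7.754, 183, 429),
    "claude-opus-4-5": (8.226, 183, 528),
    "claude-haiku-4-5": (5.404, 183, 903),
}


def output_rate(seconds: float, tokens_out: int) -> float:
    """Output tokens per wall-clock second (includes latency to first token)."""
    return tokens_out / seconds


for model, (seconds, _tokens_in, tokens_out) in results.items():
    print(f"{model}: {output_rate(seconds, tokens_out):.1f} output tokens/s")
```

That works out to about 55 tokens/s for Sonnet, 64 for Opus, and 167 for Haiku, which is how Haiku finishes first while writing roughly twice as many tokens as Sonnet.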

Evaluator Results (GPT-5.2)

| Model | Correctness | Instructions | Code Quality | Robustness | Summary |
|---|---|---|---|---|---|
| Sonnet 4.5 | 2 | 2 | 3 | 2 | Missing executable stdin path and incorrect p95 semantics. |
| Opus 4.5 | 4 | 2 | 4 | 3 | Functionally complete, but breaks “code only” constraints. |
| Haiku 4.5 | 2 | 1 | 3 | 2 | Incorrect p95 and test output printed to stdout. |

What These Results Really Mean for Engineering Teams

This isn’t a leaderboard; it’s a reality check:

- Instruction-following is the weak point across the board. That’s the failure mode that breaks pipelines.

- Haiku is fast but over-generates without tight prompt control.

- Sonnet looks reasonable but isn’t safe for automation without orchestration.

- Opus gives the best structure, but you still need output constraints.

When the model output is executed directly, “code only” isn’t a suggestion. It’s an engineering contract.
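Treating “code only” as a contract means checking it mechanically before anything executes. A minimal sketch of such a gate, using only the standard library `ast` module: it flags Markdown fences, anything that is not valid Python (which catches leading or trailing commentary), and top-level `print()` calls, one common way test output ends up on stdout. The function name and the exact rules are illustrative; a real gate should encode your own contract.

```python
import ast

FENCE = "`" * 3  # Markdown code fence, built this way so it is not a literal fence here


def code_only_violations(output: str) -> list:
    """Return contract violations; an empty list means the output can be used as-is."""
    problems = []
    if FENCE in output:
        problems.append("contains Markdown code fences")
    try:
        tree = ast.parse(output)
    except SyntaxError as exc:
        # Prose before or after the code usually lands here
        problems.append(f"not valid Python: {exc.msg} (line {exc.lineno})")
        return problems
    for node in tree.body:
        # A module-level print() runs as soon as the file executes and pollutes stdout
        if (
            isinstance(node, ast.Expr)
            and isinstance(node.value, ast.Call)
            and isinstance(node.value.func, ast.Name)
            and node.value.func.id == "print"
        ):
            problems.append(f"top-level print() on line {node.lineno}")
    return problems
```

Running a gate like this before execution turns a silent pipeline break into an explicit, loggable rejection.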

Pricing Reality Check: Easy to Verify in Foundry

Pricing is listed directly in Microsoft Foundry.

Just pick a Claude model, open the pricing tab, and compare. You can test behavior and cost side-by-side in seconds.
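Once you have the rates, the measured token counts turn into a per-request estimate with simple arithmetic. The sketch assumes separate input and output rates quoted in USD per million tokens; check the unit on the pricing tab. The rates used below are placeholders, not Claude's actual prices.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Estimated cost of one request, given per-million-token input/output rates."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


# Placeholder rates only: replace them with the numbers from the Foundry pricing tab.
PLACEHOLDER_IN, PLACEHOLDER_OUT = 1.0, 5.0
print(f"{request_cost(183, 903, PLACEHOLDER_IN, PLACEHOLDER_OUT):.6f} USD")  # Haiku's token counts
```

Since output tokens dominate the counts in this test, verbosity matters as much as the per-token rate: Haiku's 903 output tokens are more than twice Sonnet's 429.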

What This Test Is (and Isn’t)

Mini-5 is:

- A quick, low-cost smoke test for practical model behavior

- Designed to reveal obvious integration risks early

Mini-5 is not:

- A comprehensive benchmark

- A replacement for full integration testing

- A substitute for code review

Use it to inform early decisions, not to finalize them.

Final Takeaway: Don’t Wait for the Deck. Open a Terminal.

Claude models are now in Microsoft Foundry, which means you can test them faster with the tooling and governance you already have.

When a new model hits your cloud, don’t waste time debating.

- Run a quick benchmark

- Measure latency

- Count tokens

- Inspect outputs

- Apply consistent evaluation

One fast test won’t answer every question. But it will show you whether it’s worth asking the next one.

____________________________________

— Looking to explore further?

Check out the full Microsoft announcement

— Need help running your own tests?

Evgeniy Golovashev, 2bcloud

Solution Architect