Imagine visiting an e-commerce site that instantly understands your preferences, offering tailored product recommendations from the first click. For our client, this vision was about creating a seamless, engaging experience for new users by providing immediate, personalized suggestions. Using Azure ML Studio, we turned this vision into reality by solving key challenges like the “cold start problem” and building a robust recommendation system. Here’s how we made it happen.

Defining the Challenge: The Cold Start Problem

The primary goal of this project was to implement a recommendation system for new users, providing tailored suggestions that enhance the user experience. Personalized recommendations help users discover products they’re likely to love, boosting customer satisfaction and engagement. However, the challenge lay in the lack of historical data for these new users, a scenario commonly referred to as the “cold start problem.”

Without sufficient interaction history, it’s difficult to align product recommendations with user preferences. Solving this problem was at the heart of our solution.

Leveraging Azure ML Studio: Why It’s Ideal for E-Commerce

We chose Azure ML Studio as the platform for this project for several reasons:

- Ease of Use: Its no-code/low-code environment made it accessible to our client, who had limited experience with machine learning.

- Seamless Integration: Azure ML Studio integrates natively with Azure services like Blob Storage and Azure Data Factory, enabling automated data pipelines for continuous model training.

- Scalability: The platform’s flexibility allows for smooth scaling as the client’s needs evolve.

From Data to Insights: Preparing for Personalization

Tackling the Cold Start Problem

The “cold start problem,” a hurdle for any recommendation system, arises when there’s insufficient data on new users. To close that gap, we integrated a short, engaging quiz into the e-commerce website, asking users about their skin type, age, beauty concerns, and more. This not only supplied the missing data but also offered a seamless, interactive entry point for users.

During the proof-of-concept (POC) phase, we collected quiz data over five weeks, pairing it with user purchase data. This effort resulted in a dataset of around 100,000 records—a solid foundation for training our initial model.

Data Preparation

The collected data was uploaded to Blob Storage in a simple CSV format for the POC. The dataset included user features (e.g., age, skin type) and item features (e.g., product type, attributes). While everything was consolidated in one file for simplicity, a mature implementation would use dedicated feature stores to enhance flexibility and scalability.
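As a rough illustration, a consolidated file like this could be separated into per-user and per-item feature tables along the following lines. This is a minimal sketch using only the standard library; the column names and sample rows are assumptions made for the example, not the client's actual schema.

```python
import csv
import io

# Hypothetical consolidated export; column names and rows are illustrative only.
SAMPLE_CSV = """user_id,age,skin_type,item_id,product_type,order_count
u1,29,dry,p10,moisturizer,2
u1,29,dry,p11,cleanser,1
u2,41,oily,p10,moisturizer,5
"""

USER_COLUMNS = ["user_id", "age", "skin_type"]
ITEM_COLUMNS = ["item_id", "product_type"]


def split_features(csv_text):
    """Return (user_features, item_features), each keyed by ID and deduplicated."""
    users, items = {}, {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        # setdefault keeps the first occurrence of each user and item.
        users.setdefault(row["user_id"], {c: row[c] for c in USER_COLUMNS})
        items.setdefault(row["item_id"], {c: row[c] for c in ITEM_COLUMNS})
    return users, items


user_features, item_features = split_features(SAMPLE_CSV)
print(len(user_features), "users,", len(item_features), "items")
```

Keeping user and item features in separate tables makes it easier to refresh one side without rewriting the other, which is part of what a feature store would provide.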

Training the Model with Azure ML Studio

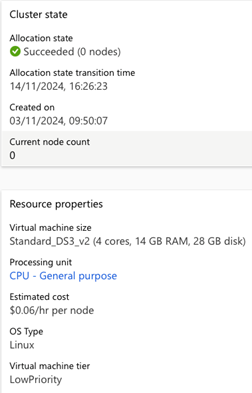

Choosing the Right Compute Resources

We selected a compute cluster with the Standard_DS3_v2 instance type (4 cores, 14 GB RAM, 28 GB disk). This setup offered an optimal balance of CPU, memory, and cost-effectiveness. The pay-as-you-go model allowed us to train the model efficiently while keeping costs under control.

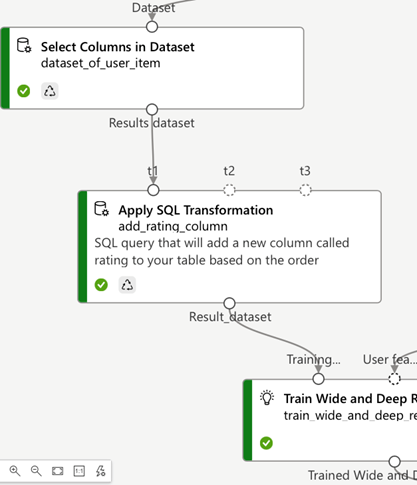

Selecting and Training the Model

Azure ML Studio offers built-in recommendation models, such as Wide and Deep and Collaborative Filtering. For this project, we chose the Wide and Deep model, which combines the strengths of a wide linear model (memorized relationships) and a deep neural network (generalizable patterns).
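To make the "wide plus deep" idea concrete, here is a minimal sketch of a single forward pass in plain Python. It follows the general shape of the architecture (a linear term and a small feed-forward network whose outputs are summed); the weights are hand-picked toy values, and the sigmoid output is an assumption for illustration, not necessarily how the Azure ML component produces its predictions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(values):
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def wide_and_deep_score(wide_features, deep_features, params):
    # Wide part: a linear model over sparse (often crossed) features.
    wide_logit = sum(w * x for w, x in zip(params["wide_w"], wide_features))
    # Deep part: a small feed-forward network over dense features.
    hidden = relu(dense(deep_features, params["hidden_w"], params["hidden_b"]))
    deep_logit = sum(w * h for w, h in zip(params["out_w"], hidden))
    # Both logits are summed, so the two parts can be trained jointly.
    return sigmoid(wide_logit + deep_logit + params["bias"])


# Toy, hand-picked weights purely for illustration.
params = {
    "wide_w": [0.8, -0.3],
    "hidden_w": [[0.5, -0.2], [0.1, 0.4]],
    "hidden_b": [0.0, 0.1],
    "out_w": [0.6, 0.9],
    "bias": -0.5,
}
score = wide_and_deep_score([1.0, 0.0], [0.3, 0.7], params)
print(round(score, 3))
```

The wide term can memorize specific feature combinations seen in training, while the deep term lets the model generalize to combinations it has not seen.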

One challenge was that the Wide and Deep model expects input in a user-item-rating format, but we lacked explicit rating data. To address this, we created a synthetic rating system based on order frequency:

- Frequency of 1 ⇒ Rating of 1

- Frequency of 2 ⇒ Rating of 2

- Frequency of 3 ⇒ Rating of 3

- Frequency ≥ 4 ⇒ Rating of 4

This approach allowed us to leverage interaction data to simulate user preferences.
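The mapping above amounts to capping the order frequency at 4. A minimal sketch, assuming purchases arrive as hypothetical (user_id, item_id) pairs with one pair per order:

```python
from collections import Counter

MAX_RATING = 4  # frequencies of 4 or more are capped at a rating of 4


def frequency_to_rating(order_count):
    """Map an order frequency to the synthetic rating described above."""
    if order_count < 1:
        raise ValueError("order_count must be at least 1")
    return min(order_count, MAX_RATING)


def build_rating_triples(orders):
    """orders: iterable of (user_id, item_id) pairs, one per purchase."""
    counts = Counter(orders)
    return [(user, item, frequency_to_rating(n))
            for (user, item), n in sorted(counts.items())]


# Illustrative data: u1 bought p10 twice and p11 once; u2 bought p10 six times.
orders = [("u1", "p10"), ("u1", "p10"), ("u1", "p11")] + [("u2", "p10")] * 6
triples = build_rating_triples(orders)
print(triples)
```

The resulting triples are in the user-item-rating shape the model expects as input.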

Configuring Hyperparameters

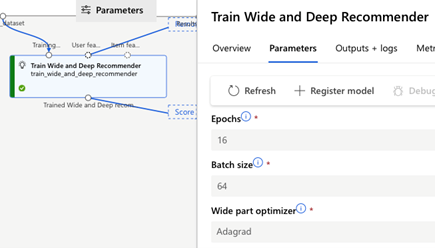

Hyperparameter tuning is a critical step in optimizing model performance, as it determines how well the algorithm learns from the data. For the Wide and Deep model, we selected the following parameters after iterative experimentation:

- Epochs: 16, allowing the model to pass through the dataset multiple times to capture deeper insights.

- Batch size: 64, balancing computation efficiency and model updates.

- Wide part optimizer: Adagrad, chosen for its adaptive learning rate capabilities that work well with sparse data.

To refine these parameters, we employed grid search and analyzed validation performance after each adjustment. This systematic approach ensured the model achieved a balance between accuracy and computational efficiency while minimizing overfitting and underfitting.
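The grid search loop itself is simple to sketch. In the example below, `toy_validation_score` is a hypothetical stand-in for "train the model with these settings and score it on the validation set"; it is contrived so that the search has a clear winner and does not represent real results from the project.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Try every combination in param_grid; return (best_score, best_params)."""
    names = sorted(param_grid)
    best_score, best_params = float("-inf"), None
    for values in product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_score, best_params = score, params
    return best_score, best_params


def toy_validation_score(params):
    # Hypothetical stand-in for a real training and validation run.
    return -abs(params["epochs"] - 16) - abs(params["batch_size"] - 64) / 32


grid = {"epochs": [8, 16, 32], "batch_size": [32, 64, 128]}
best_score, best_params = grid_search(grid, toy_validation_score)
print(best_params)
```

Because the number of runs grows multiplicatively with each added parameter, it helps to keep each grid small and widen it only around promising values.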

Model Evaluation

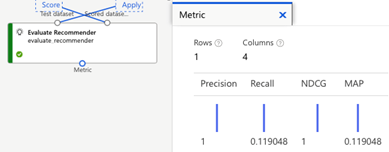

We made a deliberate choice to recommend only five items to each user. Because of this strict limit, precision is high while recall and MAP are low.

High Precision (1.0) but Low Recall (0.119)

Our model is extremely conservative: it only recommends an item if it’s almost certain it’s relevant. This yields very few (but very accurate) recommendations, hence the perfect precision of 1.0. However, this comes with a low recall of 0.119, meaning we’re missing out on a significant portion of relevant items.

Perfect NDCG (1.0) but Low MAP (0.119)

NDCG at 1.0 means every recommended item is ranked in the ideal position. But since the model recommends so few items, its overall impact (considering both quantity and relevance) is limited, resulting in a low MAP of 0.119.
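A small worked example shows how these numbers can coexist. The sketch below uses common textbook definitions of the four metrics; the exact formulas used by the evaluation step are not stated here, so treat the definitions as assumptions. The figure of 42 relevant items per user is hypothetical, chosen only because five correct recommendations out of 42 relevant items gives 5/42 ≈ 0.119.

```python
import math


def precision_at_k(recommended, relevant, k):
    hits = sum(1 for item in recommended[:k] if item in relevant)
    return hits / k


def recall_at_k(recommended, relevant, k):
    hits = sum(1 for item in recommended[:k] if item in relevant)
    return hits / len(relevant)


def average_precision_at_k(recommended, relevant, k):
    """Sum of precision at each hit, divided by the number of relevant items."""
    hits, total = 0, 0.0
    for rank, item in enumerate(recommended[:k], start=1):
        if item in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant)


def ndcg_at_k(recommended, relevant, k):
    dcg = sum(1.0 / math.log2(rank + 1)
              for rank, item in enumerate(recommended[:k], start=1)
              if item in relevant)
    ideal_hits = min(k, len(relevant))
    idcg = sum(1.0 / math.log2(rank + 1) for rank in range(1, ideal_hits + 1))
    return dcg / idcg


k = 5
relevant = {f"p{i}" for i in range(42)}    # hypothetical: 42 relevant items
recommended = [f"p{i}" for i in range(k)]  # top five, all of them relevant

precision = precision_at_k(recommended, relevant, k)
recall = recall_at_k(recommended, relevant, k)
avg_precision = average_precision_at_k(recommended, relevant, k)
ndcg = ndcg_at_k(recommended, relevant, k)
print(precision, round(recall, 3), round(avg_precision, 3), round(ndcg, 3))
```

Under these definitions, a list capped at five items cannot reach high recall for a user with many relevant items, no matter how accurate each recommendation is.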

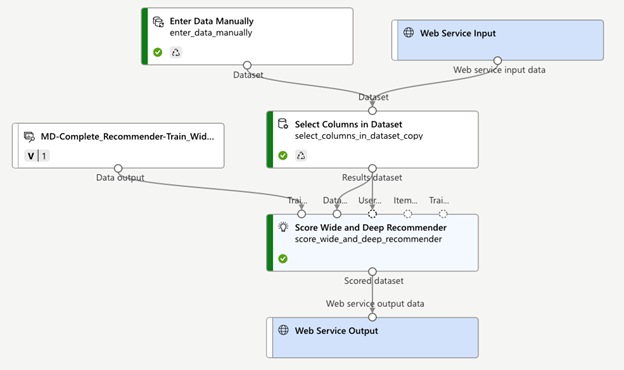

Deployment: Delivering Real-Time Recommendations

Once satisfied with the model’s performance, we deployed it using Azure ML Studio’s real-time endpoint. The compute environment’s scalability ensures instant recommendations as users interact with the site. This real-time feedback loop creates a seamless and engaging customer experience.
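From the website's side, calling a real-time endpoint is an authenticated HTTP POST with a JSON body. The sketch below only builds the request and does not send it. The URL, key, feature names, and payload shape are all placeholders or assumptions; the endpoint's own consumption details in Azure ML Studio are the authoritative source for the real schema.

```python
import json
import urllib.request

# Hypothetical values: replace with the endpoint's real scoring URI and key.
SCORING_URI = "https://example.invalid/score"
API_KEY = "<endpoint-key>"


def build_scoring_request(user_features, uri=SCORING_URI, key=API_KEY):
    """Build (but do not send) a JSON POST request for one new user."""
    # Assumed payload shape; verify it against the deployed endpoint's schema.
    payload = {"Inputs": {"WebServiceInput0": [user_features]},
               "GlobalParameters": {}}
    return urllib.request.Request(
        uri,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {key}"},
        method="POST",
    )


request = build_scoring_request({"user_id": "u1", "age": 29, "skin_type": "dry"})
print(request.get_method(), request.full_url)
# To send it: urllib.request.urlopen(request), then JSON-decode the response body.
```

In production the key would come from a secret store rather than source code, and the call would sit behind a timeout so a slow response never blocks page rendering.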

Looking Ahead: Continuous Improvement

Machine learning is a journey of iteration and discovery, and every project teaches us new lessons. Looking ahead, we’re excited to refine and expand the capabilities of this system.

Next steps include:

- Incorporating new user insights: Features like geolocation, ad campaign source, and device type will provide richer context, enabling even more precise and personalized recommendations.

- Continuous learning: Retraining the model with new data will help capture evolving user behaviors and preferences, ensuring recommendations stay relevant over time.

- Optimizing hyperparameters: Regular tuning and experimentation with hyperparameters will further enhance model performance and responsiveness.

This is more than just a system; it’s a living, evolving solution that adapts as the business and its customers grow. Our ultimate goal is to deliver an experience that feels uniquely tailored to every user, every time they interact with the platform. It’s about creating connections, fostering trust, and driving long-term engagement through the power of personalization.